Authors:

(1) Nora Schneider, Computer Science Department, ETH Zurich, Zurich, Switzerland;

(2) Shirin Goshtasbpour, Computer Science Department, ETH Zurich, Zurich, Switzerland and Swiss Data Science Center, Zurich, Switzerland;

(3) Fernando Perez-Cruz, Computer Science Department, ETH Zurich, Zurich, Switzerland and Swiss Data Science Center, Zurich, Switzerland.

Table of Links

2 Background

3.1 Comparison to C-Mixup and 3.2 Preserving nonlinear data structure

4 Experiments and 4.1 Linear synthetic data

4.2 Housing nonlinear regression

4.3 In-distribution Generalization

4.4 Out-of-distribution Robustness

5 Conclusion, Broader Impact, and References

A Additional information for Anchor Data Augmentation

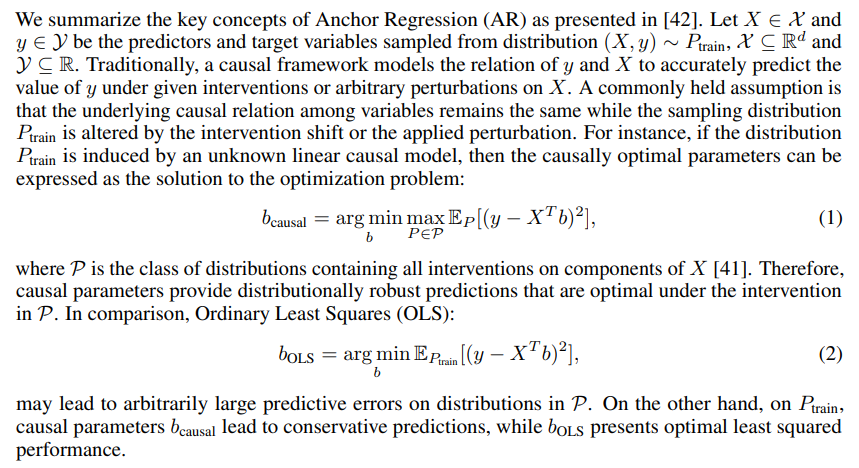

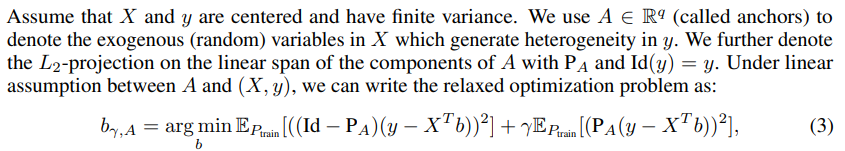

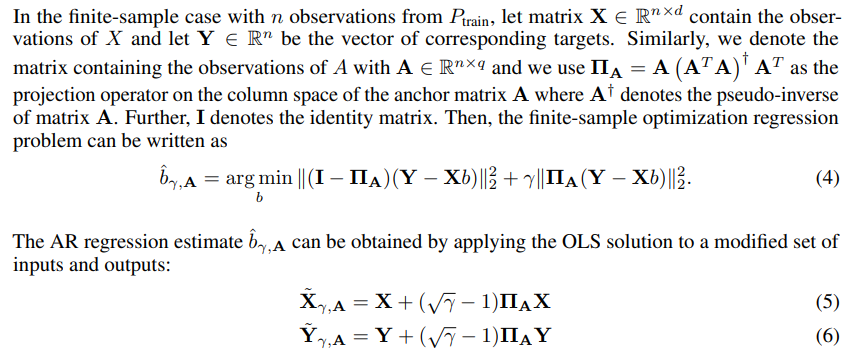

2.2 Anchor Regression

To trade off predictive accuracy on the training distribution against distributional robustness, and to enforce stability of statistical parameters, AR [4, 42] proposes to relax the regularization in the optimization problem in (1) to a smaller class of distributions P.
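For reference, the AR objective can be written in a common finite-sample form consistent with the description of its two terms below. The notation is assumed here rather than taken from this excerpt:

- X ∈ ℝ^{n×d} is the data matrix.
- y ∈ ℝ^n is the target vector.
- A ∈ ℝ^{n×q} holds the anchors.
- Π_A = A(AᵀA)^†Aᵀ is the orthogonal projection onto the column space of A.

$$
\hat b^{\gamma} = \arg\min_{b} \; \big\|(I - \Pi_A)(y - Xb)\big\|_2^2 + \gamma \, \big\|\Pi_A(y - Xb)\big\|_2^2 .
$$

Because I − Π_A and Π_A project onto orthogonal subspaces, the case γ = 1 reduces to the ordinary least-squares loss. For any residual r = y − Xb:

$$
\big\|(I - \Pi_A) r\big\|_2^2 + \big\|\Pi_A r\big\|_2^2 = \|r\|_2^2 .
$$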

In the AR objective (Equation 3), γ > 0 is a hyperparameter.

The first term is the loss after “partialling out” the anchor variable. This means first linearly regressing A out of both X and y, and then applying OLS to the residuals. The second term is the well-known estimation objective of the Instrumental Variable (IV) setting [11]. Consequently, AR interpolates between the partialling-out objective (γ = 0) and the IV estimator (γ → ∞) as γ varies, and it coincides with OLS for γ = 1.

The authors of AR show that its solution minimizes a worst-case risk under shift interventions on the anchors, up to a strength determined by γ. Larger values of γ therefore increase the robustness of the predictions to distribution shifts, at the cost of reduced in-distribution generalization.
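The interpolation described above can be checked numerically with the minimal NumPy sketch below. It assumes the finite-sample objective with Π_A the projection onto the column space of A. The helper names `project` and `anchor_regression` and the synthetic data-generating process are hypothetical and chosen only for illustration.

The sketch relies on one identity: since I − Π_A and Π_A project onto orthogonal subspaces, the objective equals ‖W(y − Xb)‖² with W = I + (√γ − 1)Π_A. AR can therefore be solved as OLS on the transformed data (WX, Wy).

```python
import numpy as np


def project(A: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Return Pi_A @ M, i.e. the fitted values of a least-squares regression of M on A."""
    coef, *_ = np.linalg.lstsq(A, M, rcond=None)
    return A @ coef


def anchor_regression(X: np.ndarray, y: np.ndarray, A: np.ndarray, gamma: float) -> np.ndarray:
    """Minimise ||(I - Pi_A)(y - Xb)||^2 + gamma * ||Pi_A(y - Xb)||^2 over b.

    (I - Pi_A) and Pi_A project onto orthogonal subspaces, so the objective equals
    ||W(y - Xb)||^2 with W = I + (sqrt(gamma) - 1) * Pi_A. AR is therefore OLS on
    the transformed data (W X, W y); W is applied without building an n x n matrix.
    """
    scale = np.sqrt(gamma) - 1.0
    X_t = X + scale * project(A, X)
    y_t = y + scale * project(A, y)
    b, *_ = np.linalg.lstsq(X_t, y_t, rcond=None)
    return b


# Hypothetical synthetic data: anchor A shifts X, hidden confounder H affects both X and y.
rng = np.random.default_rng(0)
n = 5000
A = rng.normal(size=(n, 1))
H = rng.normal(size=(n, 1))
X = 2.0 * A + H + rng.normal(size=(n, 1))
y = 1.5 * X[:, 0] + 2.0 * H[:, 0] + rng.normal(size=n)  # causal coefficient of X is 1.5

b_ols, *_ = np.linalg.lstsq(X, y, rcond=None)             # plain OLS
b_iv, *_ = np.linalg.lstsq(project(A, X), y, rcond=None)  # two-stage least squares, A as instrument

for gamma in [0.0, 0.5, 1.0, 10.0, 1e6]:
    print(f"gamma={gamma:>9}: b = {anchor_regression(X, y, A, gamma)[0]:.3f}")
print(f"OLS: {b_ols[0]:.3f}  IV: {b_iv[0]:.3f}")
```

With this data, the estimate should move from the partialling-out value (γ = 0) through OLS (γ = 1) toward the two-stage least-squares IV estimate as γ grows. Because H confounds X and y here, only the IV end targets the causal coefficient 1.5.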

This paper is available on arXiv under a CC0 1.0 DEED license.