Authors:

(1) Jongmin Lee, Department of Mathematical Science, Seoul National University;

(2) Ernest K. Ryu, Department of Mathematical Science, Seoul National University and Interdisciplinary Program in Artificial Intelligence, Seoul National University.

1.1 Notations and preliminaries

2.1 Accelerated rate for Bellman consistency operator

2.2 Accelerated rate for Bellman optimality operator

5 Approximate Anchored Value Iteration

6 Gauss–Seidel Anchored Value Iteration

7 Conclusion, Acknowledgments and Disclosure of Funding and References

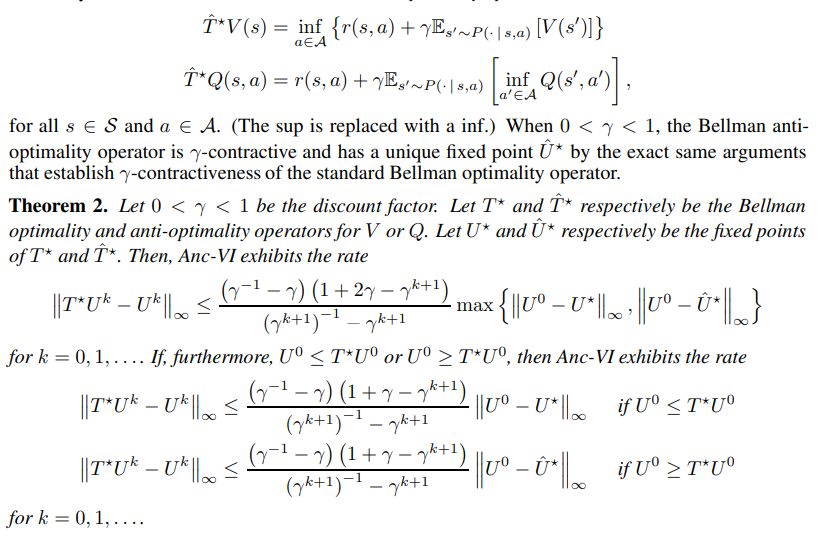

2.2 Accelerated rate for Bellman optimality operator

We now present the accelerated convergence rate of Anc-VI for the Bellman optimality operator. Our analysis uses what we call the Bellman anti-optimality operator, defined as
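As a hedged sketch of what such an operator could look like, assume standard MDP notation that this section does not itself define: action set $\mathcal{A}$, reward $r(s,a)$, transition kernel $P(\cdot \mid s,a)$, and discount factor $\gamma$. The symbol $\hat{T}^{\star}$ is likewise our assumption. Under those assumptions, a natural anti-optimality counterpart replaces the supremum over actions in the Bellman optimality operator with an infimum:

```latex
% Assumed notation (not defined in this section): r(s,a), P(. | s,a), \gamma, \mathcal{A}.
% T^{\star} is the Bellman optimality operator; \hat{T}^{\star} is the assumed anti-optimality counterpart.
\begin{align*}
T^{\star} V(s) &= \sup_{a \in \mathcal{A}} \Big\{ r(s,a) + \gamma \, \mathbb{E}_{s' \sim P(\cdot \mid s,a)} \big[ V(s') \big] \Big\}, \\
\hat{T}^{\star} V(s) &= \inf_{a \in \mathcal{A}} \Big\{ r(s,a) + \gamma \, \mathbb{E}_{s' \sim P(\cdot \mid s,a)} \big[ V(s') \big] \Big\}.
\end{align*}
```

With these forms, $\hat{T}^{\star} V \le T^{\star} V$ holds pointwise for every $V$, since an infimum never exceeds the corresponding supremum.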

Anc-VI with the Bellman optimality operator exhibits the same accelerated convergence rate as Anc-VI with the Bellman consistency operator. As in Theorem 1, the rate of Theorem 2 becomes O(1/k) when γ ≈ 1, whereas VI has only an O(1) rate.
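To make the comparison concrete, the following is a minimal NumPy sketch of VI and Anc-VI with the Bellman optimality operator on a small tabular MDP. It assumes the anchored update U^k = β_k U^0 + (1 − β_k) T*U^{k−1} with weights β_k = 1/(Σ_{i=0}^{k} γ^{−2i}), neither of which is stated in this section. The helper names (`random_mdp`, `bellman_error`, and so on) are hypothetical and chosen only for illustration.

```python
import numpy as np


def bellman_optimality(V, P, r, gamma):
    """Apply T* to V: (T*V)(s) = max_a { r(s,a) + gamma * E[V(s')] }."""
    Q = r + gamma * (P @ V)  # P: (S, A, S), V: (S,) -> Q: (S, A)
    return Q.max(axis=1)


def bellman_error(V, P, r, gamma):
    """Sup-norm Bellman error ||T*V - V||_inf."""
    return np.max(np.abs(bellman_optimality(V, P, r, gamma) - V))


def value_iteration(V0, P, r, gamma, num_iters):
    """Standard VI: V^k = T* V^{k-1}."""
    V = V0.copy()
    for _ in range(num_iters):
        V = bellman_optimality(V, P, r, gamma)
    return V


def anchored_value_iteration(V0, P, r, gamma, num_iters):
    """Anchored VI (assumed form): V^k = beta_k V^0 + (1 - beta_k) T* V^{k-1}."""
    V = V0.copy()
    inv_beta = 1.0  # running sum of gamma^(-2i), starting with the i = 0 term
    for k in range(1, num_iters + 1):
        inv_beta += gamma ** (-2 * k)  # may overflow for very large k; fine for a sketch
        beta = 1.0 / inv_beta
        V = beta * V0 + (1.0 - beta) * bellman_optimality(V, P, r, gamma)
    return V


def random_mdp(num_states, num_actions, seed=0):
    """Hypothetical helper: random transition kernel P and rewards r in [0, 1)."""
    rng = np.random.default_rng(seed)
    P = rng.random((num_states, num_actions, num_states))
    P /= P.sum(axis=2, keepdims=True)  # each P[s, a, :] sums to 1
    r = rng.random((num_states, num_actions))
    return P, r


if __name__ == "__main__":
    gamma = 0.99
    P, r = random_mdp(num_states=5, num_actions=3)
    V0 = np.zeros(5)
    for k in (10, 100):
        err_vi = bellman_error(value_iteration(V0, P, r, gamma, k), P, r, gamma)
        err_anc = bellman_error(anchored_value_iteration(V0, P, r, gamma, k), P, r, gamma)
        print(f"k={k}: VI Bellman error={err_vi:.3e}, Anc-VI Bellman error={err_anc:.3e}")
```

The rates discussed here are worst-case guarantees, so on a particular random instance either method may show the smaller Bellman error. The script is meant for inspecting the iterates, not for reproducing the theorem.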

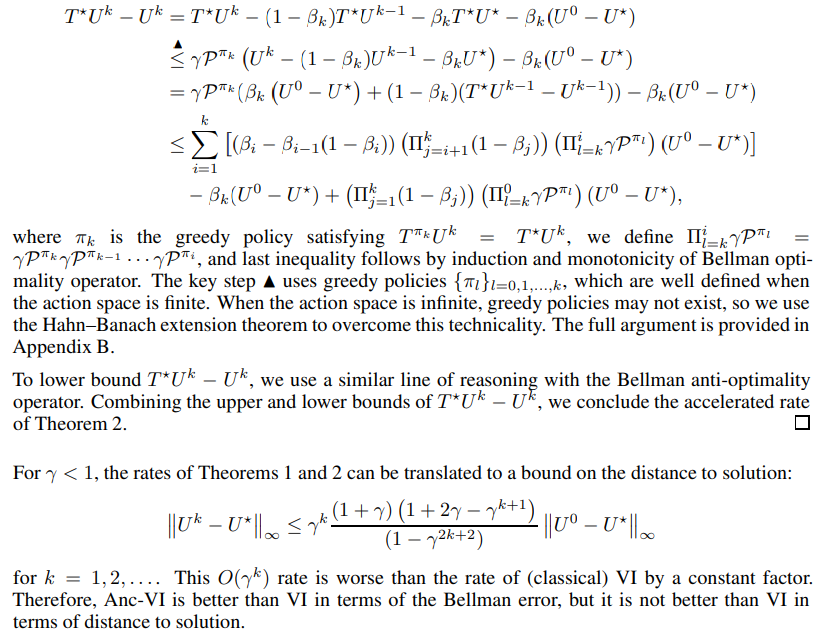

Proof outline of Theorem 2. The key technical challenge of the proof comes from the fact that the Bellman optimality operator is non-linear. Similar to the Bellman consistency operator case, we have

This paper is available on arXiv under the CC BY 4.0 DEED license.